David MacKay Information Theory PDF

Information and Inference: The connection to statistics. Cover and Thomas (1991) is the best single book on information theory.

CSSS Information Theory. Contents: Entropy and Information; Entropy and Ergodicity; Relative Entropy and Statistics; References; Entropy; Description Length; Multiple Variables and Mutual Information; Continuous Variables; Relative Entropy.

Entropy. The most fundamental notion in …
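Relative entropy appears in the outline above alongside entropy and mutual information. As a minimal sketch (an illustration, not code from the CSSS notes or from MacKay's book), the relative entropy, or Kullback-Leibler divergence, D(p‖q) = Σᵢ pᵢ log₂(pᵢ/qᵢ) between two discrete distributions over the same outcomes can be computed as below. The function name `relative_entropy` is hypothetical.

```python
import math

def relative_entropy(p, q):
    """Relative entropy (Kullback-Leibler divergence) D(p || q) in bits.

    Hypothetical helper: p and q are sequences of probabilities over the
    same outcomes, in the same order. Terms with p_i == 0 contribute
    nothing; if q_i == 0 where p_i > 0, the divergence is infinite.
    """
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue
        if qi == 0:
            return math.inf
        total += pi * math.log2(pi / qi)
    return total

# A fair coin compared with a coin biased 3:1 towards heads.
print(relative_entropy([0.5, 0.5], [0.75, 0.25]))  # ≈ 0.2075 bits
```

D(p‖q) is zero only when p = q and is not symmetric in general, which is why it is called a divergence rather than a distance.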

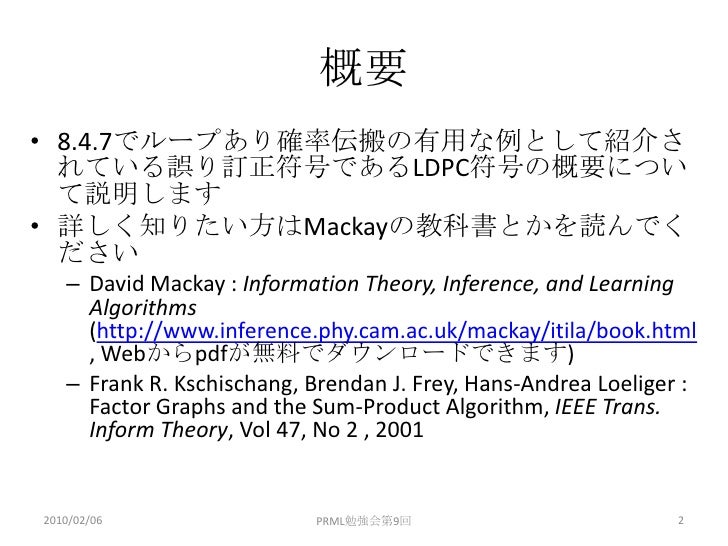

• David MacKay’s book: Information Theory, Inference, and Learning Algorithms, chapters 29-32.
• Radford Neal’s technical report on Probabilistic Inference Using …

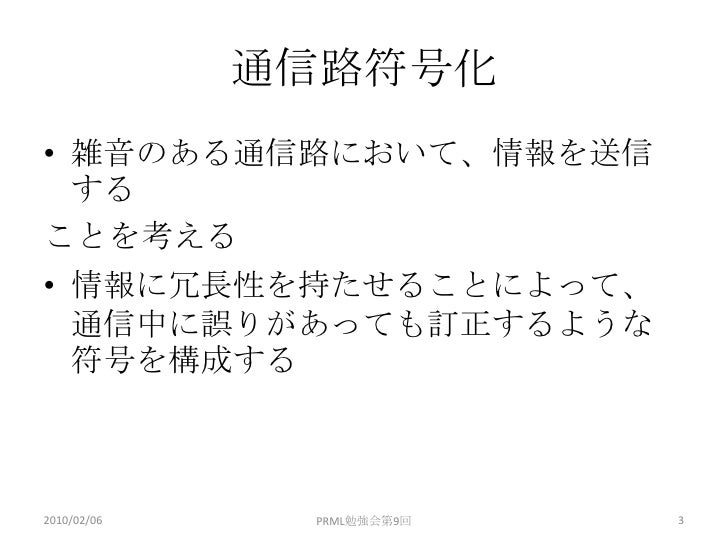

Information theory provides a quantitative measure of the information conveyed by a message or an observation. The notion was introduced by Claude Shannon in 1948 to establish the limits of what is possible in data compression and in transmission over noisy channels. Since then, the theory has found many applications in telecommunications, computer science and statistics.
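For a memoryless source, Shannon's measure is the entropy H(X) = -Σ p(x) log₂ p(x), in bits per symbol, and the source coding theorem makes it a lower bound on the expected length of any uniquely decodable symbol code. A short sketch, assuming independent symbols and using hypothetical helper names `entropy` and `empirical_entropy`:

```python
import math
from collections import Counter

def entropy(probs):
    """Shannon entropy in bits of a discrete distribution (hypothetical helper)."""
    # Zero-probability outcomes contribute nothing (0 * log 0 is taken as 0).
    return -sum(p * math.log2(p) for p in probs if p > 0)

def empirical_entropy(message):
    """Entropy in bits/symbol of the symbol frequencies observed in a message.

    Assumes the symbols are independent draws, so only frequencies matter.
    """
    counts = Counter(message)
    n = len(message)
    return entropy(c / n for c in counts.values())

print(entropy([0.5, 0.5]))            # fair coin: 1.0 bit
print(entropy([0.25] * 4))            # four equally likely outcomes: 2.0 bits
print(empirical_entropy("aaaabbcd"))  # 1.75 bits/symbol
```

For the frequencies in "aaaabbcd" (1/2, 1/4, 1/8, 1/8), a Huffman code with codeword lengths 1, 2, 3 and 3 reaches exactly 1.75 bits per symbol on average, matching the entropy.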

• David MacKay, Information Theory, Inference, and Learning Algorithms, Cambridge University Press (PDF available online)
• Abbas El Gamal and Young-Han Kim, Network Information Theory, Cambridge University Press

Entropy and data compression (3): entropy, conditional entropy, mutual information …
I especially recommend Goldie and Pinch (1991), Bishop (1995), and Sivia …
Berger, J. (1985) Statistical Decision Theory and …
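The lecture outline groups entropy, conditional entropy and mutual information together. As a sketch, assuming a joint distribution stored as a hypothetical 2-D list `joint[x][y] = P(X=x, Y=y)`, all three follow from the identities H(Y|X) = H(X,Y) - H(X) and I(X;Y) = H(X) + H(Y) - H(X,Y). The helper names `H` and `info_measures` are illustrative, not from any of the cited texts.

```python
import math

def H(probs):
    """Shannon entropy in bits; zero-probability terms are skipped."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def info_measures(joint):
    """Return (H(X), H(Y), H(X,Y), H(Y|X), I(X;Y)) for joint[x][y] = P(X=x, Y=y).

    Hypothetical helper: joint is a list of rows, one row per value of X.
    """
    px = [sum(row) for row in joint]          # marginal distribution of X
    py = [sum(col) for col in zip(*joint)]    # marginal distribution of Y
    hxy = H(p for row in joint for p in row)  # joint entropy H(X,Y)
    hx, hy = H(px), H(py)
    return hx, hy, hxy, hxy - hx, hx + hy - hxy

# Binary symmetric channel with flip probability 0.1 and a uniform input.
f = 0.1
joint = [[0.5 * (1 - f), 0.5 * f],
         [0.5 * f, 0.5 * (1 - f)]]
hx, hy, hxy, hy_given_x, mi = info_measures(joint)
print(f"H(Y|X) = {hy_given_x:.3f} bits, I(X;Y) = {mi:.3f} bits")
```

With a uniform input, I(X;Y) = 1 - H₂(0.1) ≈ 0.531 bits, which is also the capacity of a binary symmetric channel with flip probability 0.1.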

Coding methods and testing on real time series. Contents: 1. Signals and systems, basics of time series, introduction to LTI systems and filters, DFT, FFT, introduction to information theory

4. Info theory & ML: CUP, 2003, freely available online on David MacKay’s website

– Robert Ash, Information Theory
– John Pierce, An Introduction to Information Theory
– David MacKay, Information Theory, Inference, and Learning Algorithms

Information Theory – …

David MacKay was a true polymath who made pioneering contributions to information theory, inference and learning algorithms. He was a founder of the modern approach to information theory, combining Bayesian inference with artificial neural network algorithms to allow rational decision making by …

MacKay’s contributions in machine learning and information theory include the development of Bayesian methods for neural networks, the rediscovery (with Radford M. Neal) of low-density parity-check codes, and the invention of Dasher, a software application for communication especially popular with those who cannot use a traditional keyboard.
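Low-density parity-check codes are defined by a sparse parity-check matrix H: a binary word x is a codeword exactly when its syndrome Hx (mod 2) is all zeros. The sketch below only illustrates that membership test, using a (7,4) Hamming code matrix as a small stand-in (real LDPC matrices are large and sparse); it does not implement LDPC construction or decoding, and the helper names are hypothetical.

```python
def syndrome(H, x):
    """Return the syndrome H·x (mod 2) for a binary parity-check matrix H."""
    return [sum(h * xi for h, xi in zip(row, x)) % 2 for row in H]

def is_codeword(H, x):
    """A word is a codeword exactly when every parity check is satisfied."""
    return not any(syndrome(H, x))

# Parity-check matrix of a (7,4) Hamming code: a small illustrative stand-in,
# not an actual low-density parity-check construction.
H = [
    [1, 1, 1, 0, 1, 0, 0],
    [0, 1, 1, 1, 0, 1, 0],
    [1, 0, 1, 1, 0, 0, 1],
]

x = [1, 0, 0, 0, 1, 0, 1]  # source bits 1000 followed by parity bits 101
print(is_codeword(H, x))   # True
x[2] ^= 1                  # flip the third bit
print(syndrome(H, x))      # [1, 1, 1], the third column of H
```

Because each column of this H is distinct and nonzero, a single flipped bit produces a syndrome equal to that bit's column, which is what lets a decoder locate it.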

Lecture 12: Approximating Probability Distributions (II)

David MacKay, University of Cambridge (Academia.edu)

by David MacKay and David J. Ward, Cambridge. David MacKay is a physicist with interests in machine learning and information theory; he is a reader in the Department of Physics at …

The school takes place from 29 August – 10 September 2009 and will comprise ten days of both tutorial lectures and practicals. Courses will be held at the Centre for Mathematical Sciences (CMS) of the University of Cambridge, and at Microsoft Research Cambridge (MSRC).

I’ve recently been reading David MacKay’s 2003 book, Information Theory, Inference, and Learning Algorithms. It’s great background for my Bayesian computation class because he has lots of pictures and detailed discussions of the algorithms.

Information Theory, Inference and Learning Algorithms, by David J. C. MacKay. Information theory and inference, often taught separately, are here united in one entertaining textbook. These topics lie at the heart of many exciting areas of contemporary science and engineering: communication, …

“For anyone with influence on energy policy, whether in government, business or a campaign group, this book should be compulsory reading.” Tony Juniper, former Executive Director, Friends of the Earth

“At last a book that comprehensively reveals the true facts about sustainable energy in a form that …

David is professor of Strategy and Innovation and Director of Executive Education at the University of Stirling Management School. Previously, he was an owner/director in a successful consultancy start-up, an academic at the Strathclyde Business School and held production engineering and department

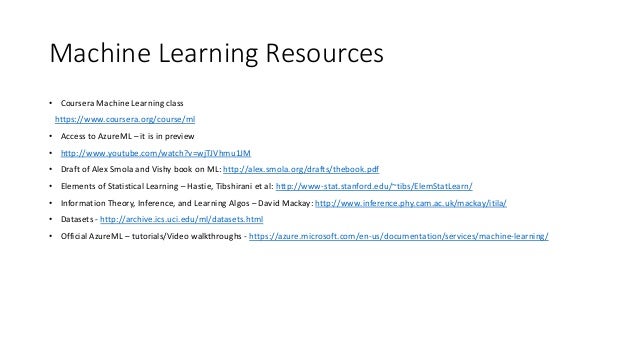

David MacKay, Information Theory, Inference, and Learning Algorithms (PDF available for free), 2003, Cambridge University Press. A non-exhaustive list of relevant chapters is below (all ranges inclusive).

As they say, the best things in life are free. David MacKay’s book on Information Theory, Inference, and Learning Algorithms is widely referenced. The PDF version is available for free. The book covers a wide array of topics and treats the topics

David MacKay, Professor of Natural Philosophy, Cavendish Laboratory, University of Cambridge. Research areas: Information Theory and Error-correcting Codes; Reliable Computation with Unreliable Hardware; Machine Learning and Bayesian Data Modelling; Sustainable Energy.

– Information Theory, Inference, and Learning Algorithms (David J.C. MacKay)
– A Discipline Independent Definition of Information (Robert M. Losee)
– An Introduction to Information Theory and …

This book provides an up-to-date introduction to information theory. In addition to the classical topics, it provides the first comprehensive treatment of the theory of I-Measure, network coding theory, Shannon and non-Shannon type information inequalities, and a relation between entropy and group theory.

On-line textbook: Information Theory, Inference, and Learning Algorithms, by David MacKay – gives an entertaining and thorough introduction to Shannon theory, …

EPSRC Centre for Doctoral Training in Autonomous

Information and coding theory Montefiore Institute ULg

David MacKay sez . . . 12?? Statistical Modeling Causal

Machine Learning Summer School 2009 University of Cambridge

ECE 696B Network Information Theory for Engineering

Lecture Entropy & Data Compression – David MacKay

David MacKay FRS Contents

Which is the best book for coding theory? Quora

Information Theory CMU Statistics

Sivia Data Analysis Bayesian Tutorial Pdf